Using Large Language Models (LLMs) securely in the enterprise

From AI Hype to Practical Implementation of Large Language Models

How can companies optimize large language models while keeping their sensitive data in-house? The goal of this article is to demonstrate approaches for analyzing unstructured, in-house data while meeting data protection requirements.

ChatGPT was an eye opener

The launch of ChatGPT opened a new chapter in the public perception of Artificial Intelligence (AI); some authors even speak of a fifth industrial revolution. One of the most exciting developments in this area is the use of Large Language Models (LLMs), such as OpenAI’s GPT-3.5, to automate and improve natural language processing tasks. In the digital age, artificial intelligence and machine learning are becoming critical for businesses that want to remain competitive.

From AI hype to practical implementation

But how can companies optimize these models while keeping their sensitive data in-house? The goal of this article is to highlight approaches for analyzing unstructured, in-house data while meeting data privacy requirements.

Large language models are trained on unimaginably large amounts of text with enormous computational effort. The principle behind them: text is broken into tokens that are represented as vectors in a high-dimensional space, and the model uses learned statistical patterns to estimate which token is most likely to come next. The answer is thus assembled token by token, roughly word by word. LLMs are therefore powerful tools for processing and generating natural language. However, these models do not perform optimally “out of the box.” They have no mind of their own and no true understanding of the world as a human brain does.
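The word-by-word selection described above can be illustrated with a toy sketch. It is not how any particular model is implemented: the vocabulary and the scores (`logits`) are made-up values, and a real LLM computes such scores with a neural network over tens of thousands of tokens.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def pick_next_token(vocabulary, logits):
    """Greedy decoding: return the most probable token and its probability."""
    probabilities = softmax(logits)
    best = max(range(len(vocabulary)), key=lambda i: probabilities[i])
    return vocabulary[best], probabilities[best]

# Hypothetical scores a model might assign after the prompt "The invoice is".
vocabulary = ["due", "blue", "running", "paid"]
logits = [2.1, -1.0, -0.5, 1.7]

token, probability = pick_next_token(vocabulary, logits)
print(token, round(probability, 2))
```

Generation repeats this step: the chosen token is appended to the text, and the model scores the next position again.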

Internal data as the key to success

Success in optimizing LLMs in enterprises starts with internal domain knowledge. Employees in various departments have a deep understanding of business processes, target audiences, and specific requirements. This knowledge is invaluable when customizing LLMs. To achieve the best results, they must be adapted to a company’s specific context and individual requirements. Fine-tuning and Retrieval Augmented Generation (RAG) are two methods to improve the performance of Large Language Models (LLMs):

- Fine-tuning continues the training of an existing LLM on task-specific data so that it performs a specific task better. However, fine-tuning requires a large amount of data to be effective.

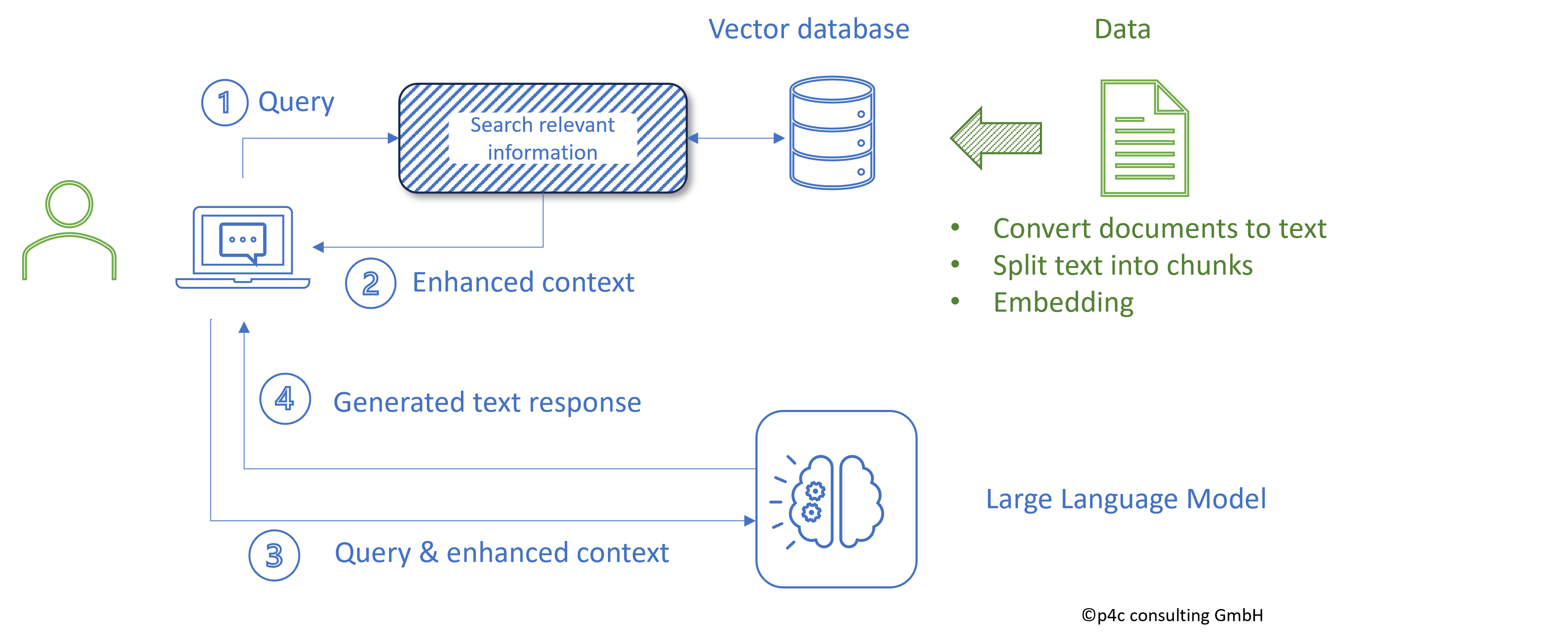

- Retrieval Augmented Generation (RAG) [i], on the other hand, uses external knowledge sources to improve the quality of the answers generated by the LLM. RAG is based on an information retrieval system that draws on databases. The LLM then processes the retrieved information and generates the answer.

Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) is currently a promising way to securely integrate proprietary data into an LLM workflow. It also takes into account the limited amount of content that fits into a single query (the token limit). For each question, a separate upstream AI model run by the company first identifies the company’s own document fragments that are relevant to the answer. These fragments are then passed to the LLM together with the query as augmented context:

- The query is used to search for relevant information in the vector database using a similarity search.

- Relevant information is added to the query.

- The extended query is passed to the LLM.

- The LLM generates a company-specific response to the query.

(See Fig. 1)
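The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production setup: `embed` is a toy bag-of-words stand-in for a real embedding model, the in-memory list `chunks` stands in for a vector database, and the example texts are invented. The final call to a locally hosted LLM is left out.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, chunks, top_k=2):
    """Step 1: similarity search over the stored chunks."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:top_k]

def build_prompt(query, context_chunks):
    """Steps 2 and 3: add the retrieved text to the query."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Invented internal documents, already split into chunks.
chunks = [
    "Travel expenses must be submitted within 30 days.",
    "The cafeteria opens at 11:30 on weekdays.",
    "Expenses above 500 euros need manager approval.",
]
query = "When do I have to submit travel expenses?"
prompt = build_prompt(query, retrieve(query, chunks))
print(prompt)  # Step 4 would pass this prompt to a locally hosted LLM.
```

Because only the retrieved fragments travel with the query, the amount of internal text sent to the model stays small and controllable.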

Vector databases as a key tool

Vector databases are an advanced technology that enables organizations to efficiently organize and retrieve internal unstructured data. A major advantage of vector databases is their ability to capture semantic relationships between concepts. They use vector representations of text data to enable efficient similarity search and analysis.

First, the various document formats containing unstructured data are read in. These can be, for example, PDFs, text formats (.docx, .txt) or web pages (.html). The text of each document is then broken down into so-called chunks, which can comprise a few hundred to several thousand characters. To ensure that the context or meaning of a text is not lost or distorted at the chunk boundaries, consecutive chunks overlap by a few characters. Embedding then makes the chunks comparable with one another and with queries: so-called embedding models convert each text into a numeric vector, so that texts with similar meaning end up close together in the vector space. A number of open source embedding models are available. Some of the best known are:

- BGE

- FastText

- Word2Vec

- GloVe

- Sent2Vec
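The chunking step described above, in which consecutive chunks overlap by a few characters, might look like the following sketch. The function name `split_into_chunks` and the default sizes are illustrative assumptions; real pipelines often split on sentence or paragraph boundaries instead of fixed character counts.

```python
def split_into_chunks(text, chunk_size=200, overlap=20):
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters so that a sentence
    cut at a boundary still appears in context in the next chunk.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks

text = "Vector databases store embeddings of document chunks. " * 5
chunks = split_into_chunks(text, chunk_size=100, overlap=10)
for chunk in chunks:
    print(len(chunk), repr(chunk[:30]))
```

Each chunk would then be passed to the chosen embedding model and stored in the vector database together with its vector.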

Ensure data privacy and security

A decisive factor in the optimization of LLMs in companies is the protection of sensitive data. Internal specialist and domain knowledge must stay within the company, and it must be handled in a secure way that is privacy-compliant and meets regulatory requirements. One way to ensure this is to use vector databases and open source LLMs that run locally on a company server or in a secured cloud. Some vector databases are listed below:

- Milvus

- Pinecone

- Qdrant

- Chroma

- Weaviate

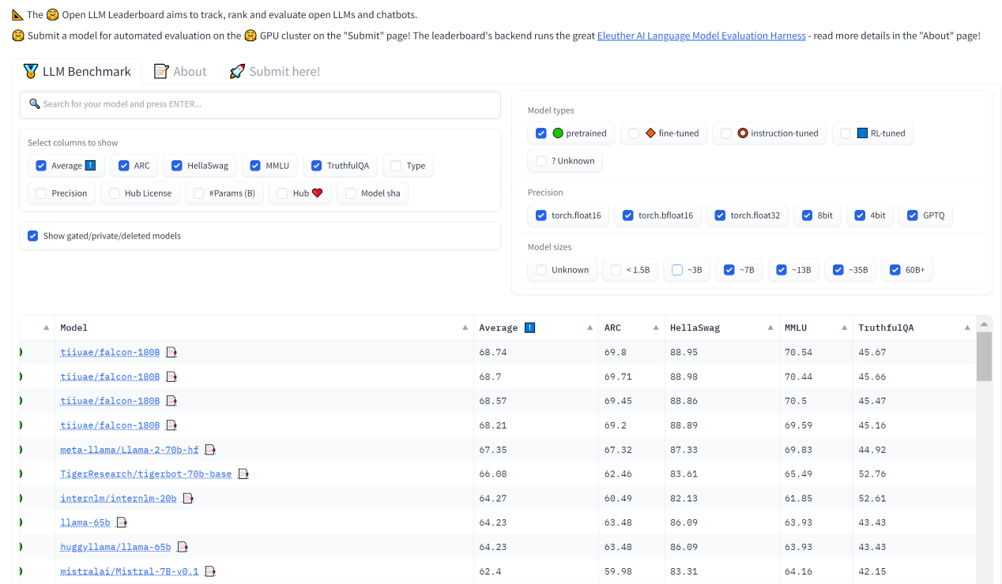

Open Source Large Language Models

To avoid exchanging data with the big technology players like OpenAI or Google, running open source large language models locally is a good choice. A strong open source community is currently developing. Meta was one of the first large companies to make its language model available to the AI community, and numerous variants have since emerged. For example, Paris-based AI startup Mistral AI, which raised over 100 million euros just five weeks after its founding, unveiled its first open source large language model in September 2023.[i] “Large” is relative here, because the model has seven billion parameters and is thus rather modest in size. The number of parameters is an important criterion for comparing language models. As a rule of thumb, larger models can capture more from their training data and tend to produce better results. The current top model, GPT-4, is said to have around a trillion parameters. However, larger models require more computing power, which makes them more expensive to develop and operate. In the leaderboard of open source LLMs published by the community website huggingface.co, the “Mistral-7B” model is already in 10th place (as of Oct. 03, 2023).
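To make the cost side of the parameter count concrete, the following back-of-the-envelope sketch estimates how much memory the weights of a model occupy. It counts the weights only and ignores activations and other runtime overhead, and the bytes-per-parameter values are assumptions about the numeric precision used.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

seven_billion = 7_000_000_000
print(weight_memory_gb(seven_billion))       # 16-bit weights: 14.0
print(weight_memory_gb(seven_billion, 4))    # 32-bit weights: 28.0
print(weight_memory_gb(seven_billion, 0.5))  # 4-bit quantized weights: 3.5
```

By the same arithmetic, a model with a trillion parameters would need about 2,000 GB for 16-bit weights, which is why models of that size are not candidates for a single local server.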

Continuous improvement

Optimizing an LLM is not a one-time process. It requires continuously updating the model with new business data to ensure it remains current and effective. Above all, continuous monitoring of LLM quality is hugely important, as the Stanford/Berkeley study “How Is ChatGPT’s Behavior Changing over Time?” showed in August 2023: the researchers compared GPT-4 and GPT-3.5 and found quality variations over time in both versions.[ii]
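One simple way to monitor quality over time is to run the same fixed set of test questions against each model version and compare the scores. The sketch below assumes a hypothetical test set and two stand-in functions, `model_v1` and `model_v2`, in place of real model calls; the substring check is a deliberately crude scoring rule.

```python
def accuracy(answer_fn, test_cases):
    """Share of test questions whose answer contains the expected text."""
    hits = sum(
        1 for question, expected in test_cases
        if expected.lower() in answer_fn(question).lower()
    )
    return hits / len(test_cases)

def has_regressed(baseline, current, tolerance=0.05):
    """Flag a drop in accuracy that exceeds the tolerance."""
    return current < baseline - tolerance

# Hypothetical fixed test set: (question, text the answer must contain).
test_cases = [
    ("What is the expense deadline?", "30 days"),
    ("Who approves large expenses?", "manager"),
]

# Stand-ins for two versions of the same model; real code would call the LLM.
def model_v1(question):
    answers = {
        "What is the expense deadline?": "Submit within 30 days.",
        "Who approves large expenses?": "Your manager approves them.",
    }
    return answers[question]

def model_v2(question):
    answers = {
        "What is the expense deadline?": "Submit within 30 days.",
        "Who approves large expenses?": "The finance team.",
    }
    return answers[question]

baseline = accuracy(model_v1, test_cases)
current = accuracy(model_v2, test_cases)
print(baseline, current, has_regressed(baseline, current))
```

Running such a check after every model or data update makes quality drifts visible before users notice them.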

In addition, several tools and libraries can help companies work with LLMs and vector databases. One example is LangChain, a Python library for building applications on top of LLMs and vector databases. It offers a variety of functions, from text generation to text analysis, and allows developers to create complex applications with LLMs.

Organizations should carefully review the available options and select those that best meet their specific needs.

Conclusion

The use of open source large language models offers companies enormous potential for analyzing unstructured, internal company data. However, the key to successful optimization lies in the company’s internal domain knowledge and in the efficient management and integration of this data using vector databases.

It is critical for executives to recognize the potential of LLMs in their organizations and take the necessary steps to realize that potential. This will not only improve the efficiency and quality of their applications, but also provide a valuable competitive advantage.

[i] https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard accessed 03.10.2023

[ii] https://arxiv.org/pdf/2307.09009.pdf accessed 04.10.2023

[i] https://www.searchenginejournal.com/mistral-ai-launches-open-source-llm-mistral-7b/497387/ accessed 04.10.2023

[i] https://learn.microsoft.com/de-de/azure/machine-learning/concept-retrieval-augmented-generation?view=azureml-api-2 accessed 03.10.2023
